Day-Night Cycle

In Minecraft 1.5.2, a full day-night cycle lasts 20 real-time minutes. This cycle stands for one ordinary day; it is simply much shorter than a real 24-hour day (1,440 minutes divided by 20 minutes, or 72 times shorter).
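The 20-minute figure can be checked with a little arithmetic. The sketch below assumes the game runs at 20 ticks per real second, that a full day is 24,000 ticks, and that tick 0 corresponds to 06:00 in-game. These values are not stated in this article. The function names are hypothetical helpers for illustration, not part of any Minecraft API.

```python
# Assumptions (not from the article): 20 ticks per real second,
# 24,000 ticks per full day-night cycle, and tick 0 = 06:00 in-game.
TICKS_PER_SECOND = 20
TICKS_PER_DAY = 24_000


def real_minutes_per_day() -> float:
    """Length of one full in-game day, in real minutes."""
    return TICKS_PER_DAY / TICKS_PER_SECOND / 60


def time_scale() -> float:
    """How many in-game minutes pass during one real minute."""
    return (24 * 60) / real_minutes_per_day()


def tick_to_clock(tick: int) -> str:
    """Convert a world tick to an in-game HH:MM clock reading."""
    day_tick = tick % TICKS_PER_DAY
    # 1,000 ticks = 1 in-game hour; add 6 hours because tick 0 is dawn.
    total_minutes = (day_tick * 60 // 1000 + 6 * 60) % (24 * 60)
    hours, minutes = divmod(total_minutes, 60)
    return f"{hours:02d}:{minutes:02d}"


print(real_minutes_per_day())  # 20.0
print(time_scale())            # 72.0
print(tick_to_clock(6000))     # 12:00
print(tick_to_clock(18000))    # 00:00
```

Under these assumptions, 24,000 ticks at 20 ticks per second is 1,200 seconds, or 20 minutes. Each real minute therefore covers 72 in-game minutes.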

Cheats for Minecraft: Unlocking the Full Gaming Potential

Hey there, fellow gamers! Ever wondered how to truly maximize your gaming experience in the pixelated wonderland of Minecraft? From creating magical worlds to battling monstrous creatures, the game can sometimes feel like an infinite maze. Well, guess what? There’s a secret: cheats! But before we delve into the magical world of Minecraft cheats, let’s […]

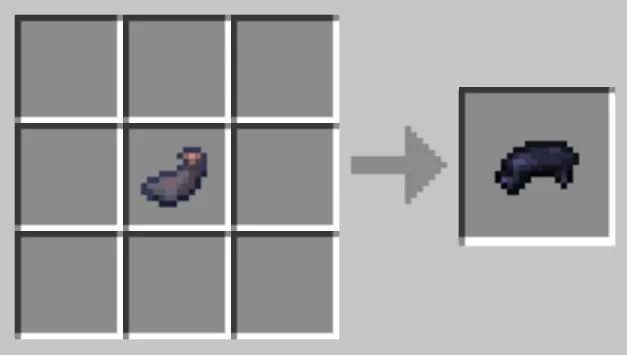

How to Make a Repeater

You need three cobblestones, which should be burnt in the oven. They are used to make a block of stone.

Maps to Minecraft

If you like big bright worlds, the action of which unfolds on vast locations, you can install the map “Fantasy Landscape”.

Constructing the Perfect Chestplate in Minecraft

Dive into the fascinating world of Minecraft, a world where crafting and survival go hand in hand. One of the essential protective gears in this pixelated universe is the chestplate, offering players significant defense bonuses against hostile mobs. Let’s delve into the intricacies of crafting and using these vital protective pieces. Understanding the Role of […]

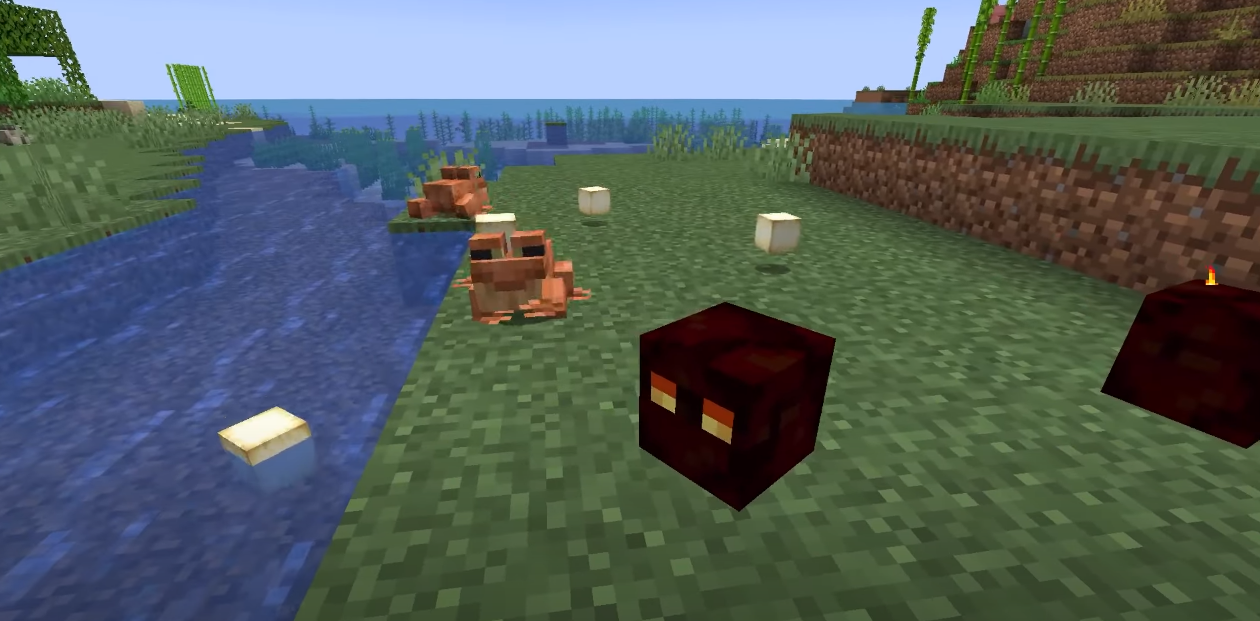

A Complete Guide to Crafting Frog Lights in Minecraft

In Minecraft, creativity knows no bounds, and the immense sandbox world allows players to create all kinds of unique items and structures. While frog lights are not part of the official game, the crafting system provides ample opportunities to invent and build anything you can imagine. This guide will walk you through the steps to […]

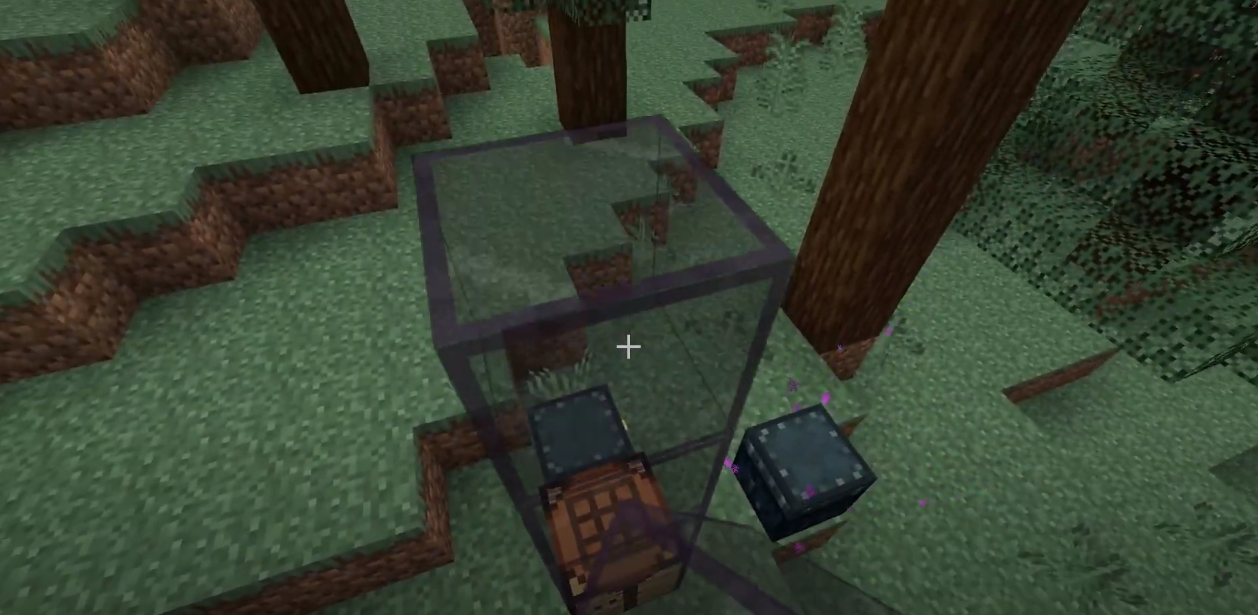

Creating Tinted Glass in Minecraft: A Comprehensive Guide

Minecraft, a world of endless creativity and exploration, invites players to transform their virtual landscapes into captivating masterpieces. One way to elevate your constructions is by incorporating tinted glass, an ingenious decorative element that infuses color and sophistication into your builds. This article delves deep into the process of crafting tinted glass in Minecraft, providing […]
